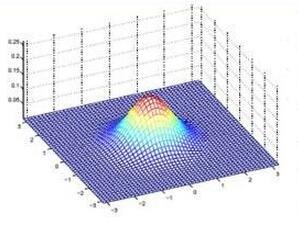

This paper is concerned with the inverse medium problem of determining the location and shape of penetrable scattering objects from measurements of the scattered field. We study a sampling indicator function for recovering the scattering object in a fast and robust way. The indicator function is flexible in that it applies to data measured in either the near-field or the far-field regime. Its implementation is simple and does not involve solving any ill-posed problems. The resolution analysis and stability estimate of the indicator function are investigated using the factorization analysis of the far-field operator along with the Funk-Hecke formula. The performance of the method is verified on both simulated and experimental data.
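As a small illustration of the Funk-Hecke formula mentioned above, its zeroth-order case in two dimensions reads $\int_{S^1} e^{ik x\cdot d}\,ds(d) = 2\pi J_0(k|x|)$. The sketch below checks this identity numerically. It is not the paper's indicator function; the helper names `bessel_j0` and `circle_integral` and the values of `k` and `x` are assumptions chosen for illustration.

```python
import cmath
import math

def bessel_j0(z, terms=40):
    """Power series J0(z) = sum_m (-1)^m (z/2)^(2m) / (m!)^2, adequate for moderate |z|."""
    total = 0.0
    term = 1.0  # the m = 0 term
    for m in range(terms):
        total += term
        term *= -((z / 2.0) ** 2) / ((m + 1) ** 2)
    return total

def circle_integral(k, x, n=400):
    """Trapezoid rule for the integral of exp(i k x.d) over unit directions d in the plane."""
    h = 2.0 * math.pi / n
    acc = 0j
    for j in range(n):
        theta = j * h
        d = (math.cos(theta), math.sin(theta))
        acc += cmath.exp(1j * k * (x[0] * d[0] + x[1] * d[1]))
    return acc * h

k = 6.0          # hypothetical wavenumber
x = (0.3, -0.4)  # hypothetical sampling point, |x| = 0.5
lhs = circle_integral(k, x)
rhs = 2.0 * math.pi * bessel_j0(k * math.hypot(*x))
print(abs(lhs - rhs))
```

The printed difference should be at the level of rounding error, since the trapezoid rule converges very quickly for smooth periodic integrands.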

Related content

The conic bundle implementation of the spectral bundle method for large scale semidefinite programming solves in each iteration a semidefinite quadratic subproblem by an interior point approach. For larger cutting model sizes the limiting operation is collecting and factorizing a Schur complement of the primal-dual KKT system. We explore possibilities to improve on this by an iterative approach that exploits structural low rank properties. Two preconditioning approaches are proposed and analyzed. Both might be of interest for rank structured positive definite systems in general. The first employs projections onto random subspaces, the second projects onto a subspace that is chosen deterministically based on structural interior point properties. For both approaches theoretic bounds are derived for the associated condition number. In the instances tested the deterministic preconditioner provides surprisingly efficient control on the actual condition number. The results suggest that for large scale instances the iterative solver is usually the better choice if precision requirements are moderate or if the size of the Schur complemented system clearly exceeds the active dimension within the subspace giving rise to the cutting model of the bundle method.

Neural networks are the state-of-the-art for many approximation tasks in high-dimensional spaces, as supported by an abundance of experimental evidence. However, we still need a solid theoretical understanding of what they can approximate and, more importantly, at what cost and accuracy. One network architecture of practical use, especially for approximation tasks involving images, is convolutional (residual) networks. However, due to the locality of the linear operators involved in these networks, their analysis is more complicated than for generic fully connected neural networks. This paper focuses on sequence approximation tasks, where a matrix or a higher-order tensor represents each observation. We show that when approximating sequences arising from space-time discretisations of PDEs we may use relatively small networks. We constructively derive these results by exploiting connections between discrete convolution and finite difference operators. Throughout, we design our network architecture to resemble those typically adopted in practice for sequence approximation tasks while retaining theoretical guarantees. Our theoretical results are supported by numerical experiments which simulate linear advection, the heat equation, and the Fisher equation. The implementation used is available at the repository associated with the paper.
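The connection between discrete convolution and finite difference operators can be made concrete with the heat equation $u_t = \nu u_{xx}$. One explicit Euler step on a periodic grid is a convolution with the three-tap stencil $(r, 1-2r, r)$, where $r = \nu\,\Delta t / h^2$, which is the identity plus $r$ times the second-difference stencil $(1, -2, 1)$, i.e. a residual update. The sketch below is not the paper's network; the function names and grid parameters are assumptions for illustration, and the kernel is fixed rather than learned.

```python
import math

def periodic_conv3(u, kernel):
    """Apply a 3-tap stencil (left, centre, right) to a periodic 1-D signal."""
    n = len(u)
    left, centre, right = kernel
    return [left * u[(j - 1) % n] + centre * u[j] + right * u[(j + 1) % n]
            for j in range(n)]

def heat_step(u, r):
    """One explicit Euler step of u_t = nu * u_xx, with r = nu * dt / h**2."""
    return periodic_conv3(u, (r, 1.0 - 2.0 * r, r))

n = 64
h = 1.0 / n
r = 0.25  # r <= 0.5 keeps the explicit scheme stable
u0 = [math.sin(2.0 * math.pi * j * h) for j in range(n)]
u1 = heat_step(u0, r)

# For a single Fourier mode the step is an exact rescaling by this factor.
factor = 1.0 - 4.0 * r * math.sin(math.pi * h) ** 2
print(max(abs(a - factor * b) for a, b in zip(u1, u0)))
```

Because the sine mode is an eigenvector of the periodic second-difference operator, the convolution output matches `factor * u0` up to rounding error.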

In this work, we focus on the inverse medium scattering problem (IMSP), which aims to recover unknown scatterers based on measured scattered data. Motivated by the efficient direct sampling method (DSM) introduced in [23], we propose a novel direct sampling-based deep learning approach (DSM-DL) for reconstructing inhomogeneous scatterers. In particular, we use the U-Net neural network to learn the relation between the index functions and the true contrasts. Our proposed DSM-DL is computationally efficient, robust to noise, easy to implement, and able to naturally incorporate multiple measured data to achieve high-quality reconstructions. Some representative tests are carried out with varying numbers of incident waves and different noise levels to evaluate the performance of the proposed method. The results demonstrate the promising benefits of combining deep learning techniques with the DSM for IMSP.

In the big data era researchers face a series of problems. Even standard approaches and methodologies, like linear regression, can be difficult or problematic with huge volumes of data. Traditional approaches for regression in big datasets may suffer due to the large sample size, since they involve inverting huge data matrices, or even because the data do not fit in memory. Approaches proposed in the literature are based on selecting representative subdata on which to run the regression. Existing approaches select the subdata using information criteria and/or properties from orthogonal arrays. In the present paper we improve on existing algorithms by providing a new algorithm based on a D-optimality approach. We provide simulation evidence for its performance. Evidence about the parameters of the proposed algorithm is also provided in order to clarify the trade-offs between execution time and information gain. Real data applications are also provided.
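To make the D-optimality criterion concrete: for a linear model with design matrix $X$, a D-optimal subset maximises $\det(X^\top X)$. The sketch below evaluates this criterion for simple linear regression with an intercept and compares an arbitrary subsample with one that keeps the most extreme covariate values. It is not the algorithm proposed in the paper; the selection rule, the function names, and the simulated data are assumptions for illustration.

```python
import random

def d_criterion(xs):
    """det(X^T X) for the model y = b0 + b1 * x, where X has rows (1, x)."""
    n = len(xs)
    sx = sum(xs)
    sxx = sum(v * v for v in xs)
    return n * sxx - sx * sx

def extreme_subdata(xs, k):
    """Keep the k // 2 smallest and k // 2 largest covariate values."""
    ordered = sorted(xs)
    half = k // 2
    return ordered[:half] + ordered[-half:]

rng = random.Random(0)  # fixed seed so the comparison is reproducible
full = [rng.gauss(0.0, 1.0) for _ in range(1000)]
k = 20
naive = full[:k]                    # first k rows, no selection
chosen = extreme_subdata(full, k)   # information-driven selection

print(d_criterion(naive), d_criterion(chosen))
```

In this one-covariate setting the criterion equals $n^2$ times the sample variance of the selected values, which is why spreading the selected points towards the tails increases it.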

Integer linear programming models a wide range of practical combinatorial optimization problems and has significant impacts in industry and management sectors. This work develops the first standalone local search solver for general integer linear programming validated on a large heterogeneous problem dataset. We propose a local search framework that switches among three modes, namely Search, Improve, and Restore modes, and design tailored operators adapted to different modes, thus improving the quality of the current solution according to different situations. For the Search and Restore modes, we propose an operator named tight move, which adaptively modifies variables' values trying to make some constraint tight. For the Improve mode, an efficient operator lift move is proposed to improve the quality of the objective function while maintaining feasibility. Putting these together, we develop a local search solver for integer linear programming called Local-ILP. Experiments conducted on the MIPLIB dataset show the effectiveness of our solver in solving large-scale hard integer linear programming problems within a reasonably short time. Local-ILP is competitive and complementary to the state-of-the-art commercial solver Gurobi and significantly outperforms the state-of-the-art non-commercial solver SCIP. Moreover, our solver establishes new records for 6 MIPLIB open instances.
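One possible reading of the tight move idea is sketched below for a single constraint $a \cdot x \le b$ with integer coefficients: change one variable by the integer amount that brings the constraint as close to equality as possible without violating it, then clip to the variable's bounds. This is an interpretation of the one-sentence description above, not the operator as implemented in Local-ILP; the function names, the constraint form, and the bound handling are all assumptions.

```python
def tight_delta(a, x, b, j):
    """Integer change to x[j] that makes a.x <= b hold with the least slack."""
    if a[j] == 0:
        raise ValueError("variable j does not appear in the constraint")
    slack = b - sum(ai * xi for ai, xi in zip(a, x))
    if a[j] > 0:
        return slack // a[j]       # floor(slack / a_j)
    return -((-slack) // a[j])     # ceil(slack / a_j) for negative a_j

def tight_move(a, x, b, j, lower, upper):
    """Apply the tight change to x[j], clipped to the bounds [lower[j], upper[j]]."""
    new_value = x[j] + tight_delta(a, x, b, j)
    new_value = min(max(new_value, lower[j]), upper[j])
    moved = list(x)
    moved[j] = new_value
    return moved

a, b = [3, 2], 12
lower, upper = [0, 0], [10, 10]
print(tight_move(a, [1, 1], b, 0, lower, upper))  # satisfied constraint, slack is reduced
print(tight_move(a, [5, 1], b, 0, lower, upper))  # violated constraint, feasibility is restored
```

The same formula covers both cases: with positive slack the variable moves to consume it, and with negative slack it moves just far enough to satisfy the constraint, unless the bounds stop it first.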

We propose fast and communication-efficient optimization algorithms for multi-robot rotation averaging and translation estimation problems that arise from collaborative simultaneous localization and mapping (SLAM), structure-from-motion (SfM), and camera network localization applications. Our methods are based on theoretical relations between the Hessians of the underlying Riemannian optimization problems and the Laplacians of suitably weighted graphs. We leverage these results to design a collaborative solver in which robots coordinate with a central server to perform approximate second-order optimization, by solving a Laplacian system at each iteration. Crucially, our algorithms permit robots to employ spectral sparsification to sparsify intermediate dense matrices before communication, and hence provide a mechanism to trade off accuracy with communication efficiency with provable guarantees. We perform rigorous theoretical analysis of our methods and prove that they enjoy (local) linear rate of convergence. Furthermore, we show that our methods can be combined with graduated non-convexity to achieve outlier-robust estimation. Extensive experiments on real-world SLAM and SfM scenarios demonstrate the superior convergence rate and communication efficiency of our methods.

We present an alternative to reweighting techniques for modifying distributions to account for a desired change in an underlying conditional distribution, as is often needed to correct for mis-modelling in a simulated sample. We employ conditional normalizing flows to learn the full conditional probability distribution from which we sample new events for conditional values drawn from the target distribution to produce the desired, altered distribution. In contrast to common reweighting techniques, this procedure is independent of binning choice and does not rely on an estimate of the density ratio between two distributions. In several toy examples we show that normalizing flows outperform reweighting approaches to match the distribution of the target. We demonstrate that the corrected distribution closes well with the ground truth, and a statistical uncertainty on the training dataset can be ascertained with bootstrapping. In our examples, this leads to a statistical precision up to three times greater than using reweighting techniques with identical sample sizes for the source and target distributions. We also explore an application in the context of high energy particle physics.

Discrete Differential Equations (DDEs) are functional equations that relate polynomially a power series $F(t,u)$ in $t$ with polynomial coefficients in a "catalytic" variable $u$ and the specializations, say at $u=1$, of $F(t,u)$ and of some of its partial derivatives in $u$. DDEs occur frequently in combinatorics, especially in map enumeration. If a DDE is of fixed-point type then its solution $F(t,u)$ is unique, and a general result by Popescu (1986) implies that $F(t,u)$ is an algebraic power series. Constructive proofs of algebraicity for solutions of fixed-point type DDEs were proposed by Bousquet-Mélou and Jehanne (2006). Bostan et al. (2022) initiated a systematic algorithmic study of such DDEs of order 1. We generalize this study to DDEs of arbitrary order. First, we propose nontrivial extensions of algorithms based on polynomial elimination and on the guess-and-prove paradigm. Second, we design two brand-new algorithms that exploit the special structure of the underlying polynomial systems. Last, but not least, we report on implementations that are able to solve highly challenging DDEs with a combinatorial origin.

Stratification in both the design and analysis of randomized clinical trials is common. Despite features in automated randomization systems to re-confirm the stratifying variables, incorrect values of these variables may be entered. These errors are often detected during subsequent data collection and verification. Questions remain about whether to use the mis-reported initial stratification or the corrected values in subsequent analyses. It is shown that the likelihood function resulting from the design of randomized clinical trials supports the use of the corrected values. New definitions are proposed that characterize misclassification errors as 'ignorable' and 'non-ignorable'. Ignorable errors may depend on the correct strata and any other modeled baseline covariates, but they are otherwise unrelated to potential treatment outcomes. Data management review suggests most misclassification errors are arbitrarily produced by distracted investigators, so they are ignorable or at most weakly dependent on measured and unmeasured baseline covariates. Ignorable misclassification errors may produce a small increase in standard errors, but other properties of the planned analyses are unchanged (e.g., unbiasedness, confidence interval coverage). It is shown that unbiased linear estimation in the absence of misclassification errors remains unbiased when there are non-ignorable misclassification errors, and the corresponding confidence intervals based on the corrected strata values are conservative.

GAN inversion aims to invert a given image back into the latent space of a pretrained GAN model, so that the image can be faithfully reconstructed from the inverted code by the generator. As an emerging technique to bridge the real and fake image domains, GAN inversion plays an essential role in enabling pretrained GAN models such as StyleGAN and BigGAN to be used for real image editing applications. Meanwhile, GAN inversion also provides insights into the interpretation of GAN's latent space and how realistic images can be generated. In this paper, we provide an overview of GAN inversion with a focus on its recent algorithms and applications. We cover important techniques of GAN inversion and their applications to image restoration and image manipulation. We further elaborate on some trends and challenges for future directions.