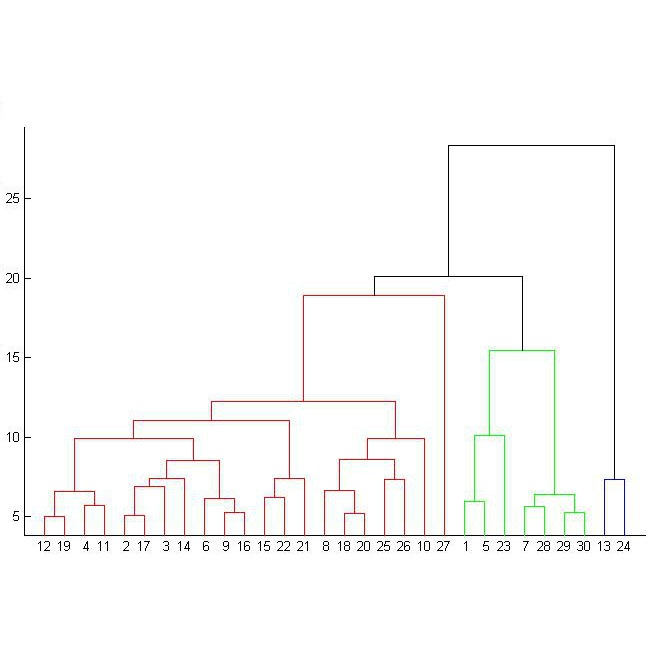

A rank-invariant clustering of variables is introduced that is based on the predictive strength between groups of variables, i.e., two groups are assigned a high similarity if the variables in the first group contain high predictive information about the behaviour of the variables in the other group and/or vice versa. The method presented here is model-free, dependence-based and does not require any distributional assumptions. Various general invariance and continuity properties are investigated, with special attention to those that are beneficial for the agglomerative hierarchical clustering procedure. A fully non-parametric estimator is considered whose excellent performance is demonstrated in several simulation studies and by means of real-data examples.

Related content

Composite quantile regression has been used to obtain robust estimators of regression coefficients in linear models with good statistical efficiency. By revealing an intrinsic link between the composite quantile regression loss function and the Wasserstein distance from the residuals to the set of quantiles, we establish a generalization of composite quantile regression to the multiple-output setting. Theoretical convergence rates of the proposed estimator are derived both under the setting where the additive error possesses only a finite $\ell$-th moment (for $\ell > 2$) and where it exhibits a sub-Weibull tail. In doing so, we develop novel techniques for analyzing M-estimation problems that involve the Wasserstein distance in the loss. Numerical studies confirm the practical effectiveness of our proposed procedure.
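For orientation, the classical single-output composite quantile regression loss can be sketched as follows. This is a minimal illustration of the standard formulation (a shared slope vector with one intercept per quantile level), not the multiple-output Wasserstein-based estimator proposed in the abstract; the function names `check_loss` and `cqr_loss` are hypothetical.

```python
def check_loss(u, tau):
    """Quantile (check) loss rho_tau(u) = u * (tau - 1{u < 0})."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def cqr_loss(x, y, beta, intercepts, taus):
    """Composite quantile regression loss for a single-output linear model.

    x          : list of feature vectors (one list of floats per observation)
    y          : list of responses
    beta       : slope vector shared across all quantile levels
    intercepts : one intercept b_k per quantile level
    taus       : quantile levels in (0, 1), same length as intercepts
    """
    total = 0.0
    for b_k, tau in zip(intercepts, taus):
        for x_i, y_i in zip(x, y):
            fitted = b_k + sum(bj * xj for bj, xj in zip(beta, x_i))
            total += check_loss(y_i - fitted, tau)
    return total


# Toy data lying exactly on the line y = 2 * x (illustrative values only).
x = [[1.0], [2.0]]
y = [2.0, 4.0]
taus = [0.25, 0.75]
loss_at_truth = cqr_loss(x, y, [2.0], [0.0, 0.0], taus)
loss_off_truth = cqr_loss(x, y, [1.0], [0.0, 0.0], taus)
```

Summing the check loss over several quantile levels while sharing the slope is what gives the estimator its robustness: no single quantile level dominates the fit.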

Partial differential equations (PDEs) have become an essential tool for modeling complex physical systems. Such equations are typically solved numerically via mesh-based methods, such as finite element methods, with solutions over the spatial domain. However, obtaining these solutions is often prohibitively costly, limiting the feasibility of exploring parameters in PDEs. In this paper, we propose an efficient emulator that simultaneously predicts the solutions over the spatial domain, with theoretical justification of its uncertainty quantification. The novelty of the proposed method lies in the incorporation of the mesh node coordinates into the statistical model. In particular, the proposed method segments the mesh nodes into multiple clusters via a Dirichlet process prior and fits Gaussian process models with the same hyperparameters in each of them. Most importantly, by revealing the underlying clustering structures, the proposed method can provide valuable insights into qualitative features of the resulting dynamics that can be used to guide further investigations. Real examples are demonstrated to show that our proposed method has smaller prediction errors than its main competitors, with competitive computation time, and identifies interesting clusters of mesh nodes that possess physical significance, such as satisfying boundary conditions. An R package for the proposed methodology is provided in an open repository.

Dynamical low-rank approximation has become a valuable tool to perform an on-the-fly model order reduction for prohibitively large matrix differential equations. A core ingredient is the construction of integrators that are robust to the presence of small singular values and the resulting large time derivatives of the orthogonal factors in the low-rank matrix representation. Recently, the robust basis-update & Galerkin (BUG) class of integrators has been introduced. These methods require no steps that evolve the solution backward in time, often have favourable structure-preserving properties, and allow for parallel time-updates of the low-rank factors. The BUG framework is flexible enough to allow for adaptations to these and further requirements. However, the BUG methods presented so far have only first-order robust error bounds. This work proposes a second-order BUG integrator for dynamical low-rank approximation based on the midpoint rule. The integrator first performs a half-step with a first-order BUG integrator, followed by a Galerkin update with a suitably augmented basis. We prove a robust second-order error bound which in addition shows an improved dependence on the normal component of the vector field. These rigorous results are illustrated and complemented by a number of numerical experiments.

We investigate pointwise estimation of the function-valued velocity field of a second-order linear SPDE. Based on multiple spatially localised measurements, we construct a weighted augmented MLE and study its convergence properties as the spatial resolution of the observations tends to zero and the number of measurements increases. By imposing Hölder smoothness conditions, we recover the pointwise convergence rate known to be minimax-optimal in the linear regression framework. The optimality of the rate in the current setting is verified by adapting the lower bound ansatz based on the RKHS of local measurements to the nonparametric situation.

Open sets are central to mathematics, especially analysis and topology, in ways few notions are. In most, if not all, computational approaches to mathematics, open sets are only studied indirectly via their 'codes' or 'representations'. In this paper, we study how hard it is to compute, given an arbitrary open set of reals, the most common representation, i.e. a countable set of open intervals. We work in Kleene's higher-order computability theory, which was historically based on the S1-S9 schemes and which now has an intuitive lambda calculus formulation due to the authors. We establish many computational equivalences between, on the one hand, the 'structure' functional that converts open sets to the aforementioned representation and, on the other hand, functionals arising from mainstream mathematics, like basic properties of semi-continuous functions, the Urysohn lemma, and the Tietze extension theorem. We also compare these functionals to known operations on regulated and bounded variation functions, and the Lebesgue measure restricted to closed sets. We obtain a number of natural computational equivalences for the latter involving theorems from mainstream mathematics.

Consistency models, which were proposed to mitigate the high computational overhead during the sampling phase of diffusion models, facilitate single-step sampling while attaining state-of-the-art empirical performance. When integrated into the training phase, consistency models attempt to train a sequence of consistency functions capable of mapping any point at any time step of the diffusion process to its starting point. Despite the empirical success, a comprehensive theoretical understanding of consistency training remains elusive. This paper takes a first step towards establishing theoretical underpinnings for consistency models. We demonstrate that, in order to generate samples within $\varepsilon$ proximity to the target in distribution (measured by some Wasserstein metric), it suffices for the number of steps in consistency learning to exceed the order of $d^{5/2}/\varepsilon$, with $d$ the data dimension. Our theory offers rigorous insights into the validity and efficacy of consistency models, illuminating their utility in downstream inference tasks.

In 2012 Chen and Singer introduced the notion of discrete residues for rational functions as a complete obstruction to rational summability. More explicitly, for a given rational function f(x), there exists a rational function g(x) such that f(x) = g(x+1) - g(x) if and only if every discrete residue of f(x) is zero. Discrete residues have many important further applications beyond summability: to creative telescoping problems, thence to the determination of (differential-)algebraic relations among hypergeometric sequences, and subsequently to the computation of (differential) Galois groups of difference equations. However, the discrete residues of a rational function are defined in terms of its complete partial fraction decomposition, which makes their direct computation impractical due to the high complexity of completely factoring arbitrary denominator polynomials into linear factors. We develop a factorization-free algorithm to compute discrete residues of rational functions, relying only on gcd computations and linear algebra.
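As a small illustration of the summability criterion (a standard textbook example, not taken from the paper), consider $f(x) = \frac{1}{x(x+1)}$:

$$
f(x) = \frac{1}{x(x+1)} = \frac{1}{x} - \frac{1}{x+1}, \qquad g(x) = -\frac{1}{x}, \qquad g(x+1) - g(x) = -\frac{1}{x+1} + \frac{1}{x} = f(x).
$$

The two simple poles $0$ and $-1$ lie in the same orbit under integer shifts, and their partial-fraction coefficients $1$ and $-1$ sum to zero. This is consistent with a vanishing discrete residue, assuming the usual definition as the sum of the coefficients of a fixed order along a shift orbit. By contrast, $f(x) = 1/x$ has the single coefficient $1$ in the orbit of $0$, so its discrete residue is nonzero and no rational $g(x)$ exists.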

Mendelian randomization (MR) is an instrumental variable (IV) approach to infer causal relationships between exposures and outcomes with genome-wide association studies (GWAS) summary data. However, the multivariable inverse-variance weighting (IVW) approach, which serves as the foundation for most MR approaches, cannot yield unbiased causal effect estimates in the presence of many weak IVs. To address this problem, we proposed the MR using Bias-corrected Estimating Equation (MRBEE), which can infer unbiased causal relationships with many weak IVs and account for horizontal pleiotropy simultaneously. While the practical significance of MRBEE was demonstrated in our parallel work (Lorincz-Comi (2023)), this paper establishes the statistical theory of multivariable IVW and MRBEE with many weak IVs. First, we show that the bias of the multivariable IVW estimate is caused by the error-in-variable bias, whose scale and direction are inflated and influenced by weak instrument bias and sample overlaps of exposures and outcome GWAS cohorts, respectively. Second, we investigate the asymptotic properties of multivariable IVW and MRBEE, showing that MRBEE outperforms multivariable IVW regarding unbiasedness of causal effect estimation and asymptotic validity of causal inference. Finally, we apply MRBEE to examine myopia and find that education and outdoor activity are causal to myopia whereas indoor activity is not.

The availability of data is limited in some fields, especially for object detection tasks, where it is necessary to have correctly labeled bounding boxes around each object. A notable example of such data scarcity is found in the domain of marine biology, where it is useful to develop methods to automatically detect submarine species for environmental monitoring. To address this data limitation, state-of-the-art machine learning strategies employ two main approaches. The first involves pretraining models on existing datasets before generalizing to the specific domain of interest. The second strategy is to create synthetic datasets specifically tailored to the target domain using methods like copy-paste techniques or ad-hoc simulators. The first strategy often faces a significant domain shift, while the second demands custom solutions crafted for the specific task. In response to these challenges, we propose a transfer learning framework that is valid for a generic scenario. In this framework, generated images help to improve the performance of an object detector in a regime with few real data. This is achieved through a diffusion-based generative model that was pretrained on large generic datasets and is not trained on the task-specific domain. We validate our approach on object detection tasks, specifically focusing on fish in an underwater environment and on the more common domain of cars in an urban setting. Our method achieves detection performance comparable to models trained on thousands of images, using only a few hundred input images. Our results pave the way for new generative AI-based protocols for machine learning applications in various domains, ranging from geophysics to biology and medicine.

Multi-product formulas (MPF) are linear combinations of Trotter circuits offering high-quality simulation of Hamiltonian time evolution with fewer Trotter steps. Here we report two contributions aimed at making multi-product formulas more viable for near-term quantum simulations. First, we extend the theory of Trotter error with commutator scaling developed by Childs, Su, Tran et al. to multi-product formulas. Our result implies that multi-product formulas can achieve a quadratic reduction of Trotter error in 1-norm (nuclear norm) on arbitrary time intervals compared with the regular product formulas without increasing the required circuit depth or qubit connectivity. The number of circuit repetitions grows only by a constant factor. Second, we introduce dynamic multi-product formulas with time-dependent coefficients chosen to minimize a certain efficiently computable proxy for the Trotter error. We use a minimax estimation method to make dynamic multi-product formulas robust to uncertainty from algorithmic errors, sampling and hardware noise. We call this method Minimax MPF and we provide a rigorous bound on its error.
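The basic idea of a multi-product formula can be sketched on a single qubit. The example below is an illustrative assumption, not the paper's dynamic or Minimax MPF: it uses the Hamiltonian $H = X + Z$, second-order (symmetric) Trotter steps, and the static Richardson-type coefficients $(-1/3, 4/3)$ for $k = 1, 2$ steps, which cancel the leading error term. All helper names are hypothetical.

```python
import math

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def lincomb(terms):
    """Linear combination of 2x2 matrices; terms is a list of (coeff, matrix)."""
    return [[sum(c * m[i][j] for c, m in terms) for j in range(2)]
            for i in range(2)]


def exp_involution(p, theta):
    """exp(-i * theta * P) for any matrix P with P @ P = I."""
    return lincomb([(math.cos(theta), I2), (-1j * math.sin(theta), p)])


def exact(t):
    """Exact evolution exp(-i t (X + Z)); (X + Z)/sqrt(2) squares to I."""
    s = math.sqrt(2)
    return exp_involution(lincomb([(1 / s, X), (1 / s, Z)]), s * t)


def trotter2(t, k):
    """k steps of the symmetric second-order Trotter formula."""
    dt = t / k
    half_x = exp_involution(X, dt / 2)
    step = matmul(matmul(half_x, exp_involution(Z, dt)), half_x)
    out = I2
    for _ in range(k):
        out = matmul(out, step)
    return out


def mpf(t):
    """Linear combination of Trotter circuits with k = 1 and k = 2 steps."""
    return lincomb([(-1 / 3, trotter2(t, 1)), (4 / 3, trotter2(t, 2))])


def max_entry_error(a, b):
    """Largest entrywise absolute difference between two 2x2 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))


t = 0.2
err_trotter = max_entry_error(trotter2(t, 2), exact(t))
err_mpf = max_entry_error(mpf(t), exact(t))
```

The coefficients solve $c_1 + c_2 = 1$ and $c_1 + c_2/4 = 0$, so the combination removes the error term proportional to $1/k^2$ without using circuits deeper than the $k = 2$ one.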